Weights & Biases

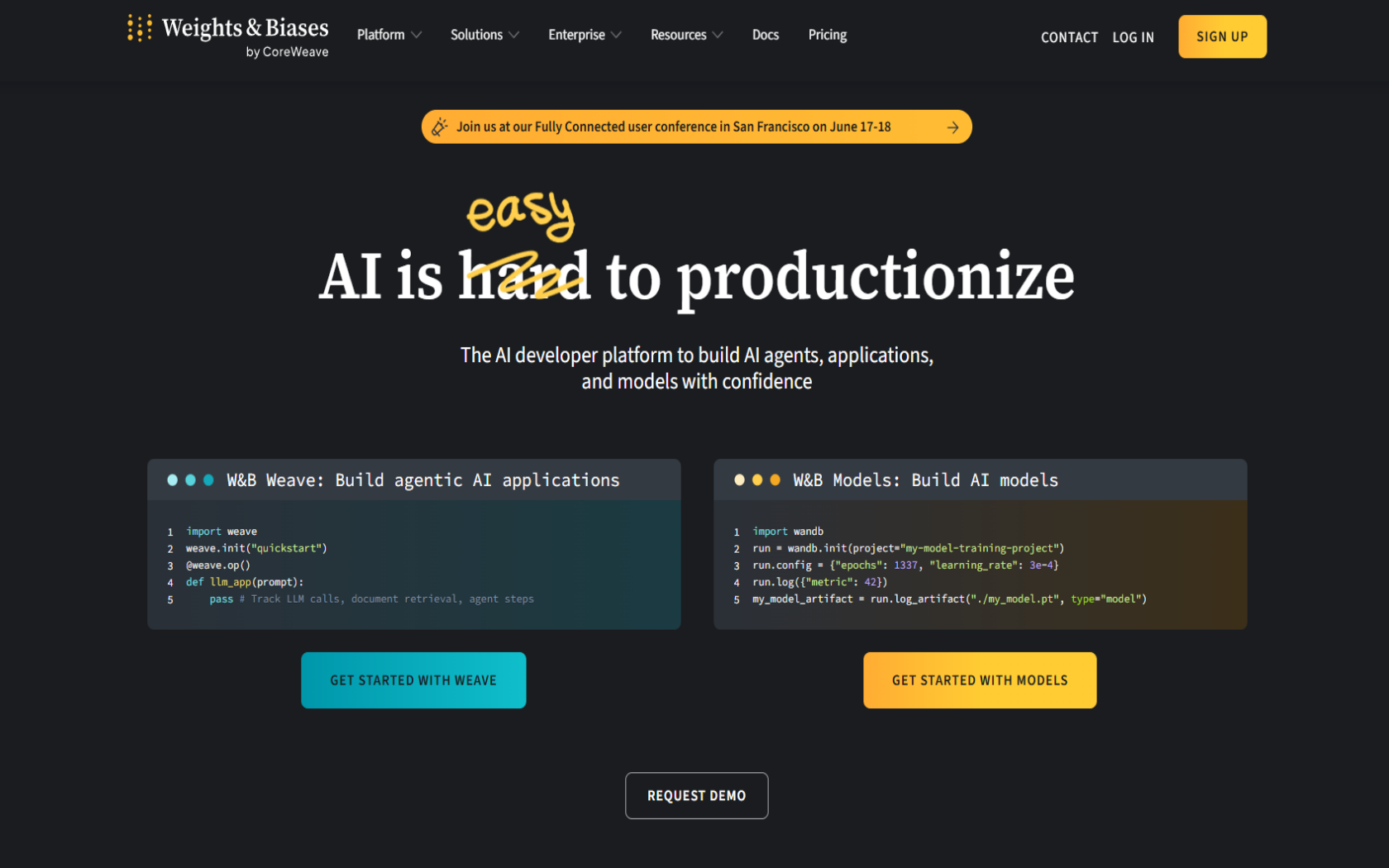

Weights & Biases (W&B) is a web-based experiment tracking and MLOps platform that brings the main stages of AI development together in one place, including experiment logging, model performance management, and hyperparameter tuning.

- Launch Date: 2017

- Monthly Visitors: 3.2M

- Country of Origin: United States

- Platform: Web

- Languages: English, Korean, Japanese, and German

Keywords

- Experiment tracking

- hyperparameter tuning

- model registry

- artifact versioning

- LLM evaluation

- Traces

- Weave

- agents

- automation

- MLOps

- LLMOps

- collaboration

- dashboards

- visualization

- alerts

Platform Description

Core Features

- Experiment Tracking: Automatically log code, hyperparameters, and metrics so experiments can be compared.

- Hyperparameter Tuning (Sweeps): Automatically search for the best configuration parameters for an experiment.

- Artifact Versioning (Artifacts): Version and reuse models, datasets, and intermediate outputs.

- Model Registry: Register and share models, and search them by metadata.

- Traces (LLM call traces): Trace LLM inputs and outputs and agent call logs in a tree structure.

- Evaluations (LLM Evaluation): Benchmark multiple prompts and model responses and score them quantitatively.

- Dashboards and Reports (Reports/Tables): Visualize experiments and create reports for team collaboration.

- SDKs and Automations: Automate logging, alerts, and pipeline triggers with the Python/JS SDKs.

Use Cases

- OpenAI

- Canva

- Microsoft

- Toyota

- AstraZeneca

- Siemens

- SambaNova

- Mercari

- Captur

- Riskfuel

- AWS AI

- Developing agents

- LLM Inference Monitoring

- RAG

- Debugging agents

- Compare model performance

- Collaborative MLOps

How to Use

Initialize a project

Set up experiment tracking

Analyze results in the web dashboard

Share models and collaborate

Plans

| Plan | Price | Key Features |
|---|---|---|
| Free (cloud-hosted) | $0 | • AI application evaluation • AI application tracing • Model experiment tracking • Asset registration and lineage management • AI evaluation score logging • Community support |
| Pro (cloud-hosted) | $50/mo | • Everything in Free • CI/CD automation • Slack and email notifications • Unlimited team collaboration • Team-level access control • Service account support • Priority email and chat support |
| Enterprise (cloud-hosted) | Contact us | • Everything in Pro • Single-tenant deployment in your choice of region • HIPAA compliance options • Secure private connectivity • User-managed encryption keys • Single sign-on (SSO) • Automatic user provisioning • Custom role management • Audit logs • Enterprise support packages |
| Personal (privately hosted) | $0 | • 1 user • Experiment tracking • Registry and lineage tracking • Runs on your own local server (Docker + Python) • Personal projects only; not available for enterprise use |
| Advanced Enterprise (privately hosted) | Contact us | • Everything in Personal • Flexible deployment options • HIPAA compliance • Secure connectivity • User-managed encryption keys • Single sign-on (SSO) • Automatic user provisioning • Custom role management • Audit logs • Enterprise support packages • Deployment on your own infrastructure (enterprise license available) |

FAQs


W&B is an experiment tracking and MLOps platform for organizing machine learning and generative AI model development.

It automatically records settings, hyperparameters, code versions, performance results, and more during model training, and lets you analyze them in real time on the web.

Machine learning experiments can yield different results each time, even with the same data and code, so it's important to record and compare exactly what influenced performance.

W&B automates this record-keeping, making experiments faster to reproduce, share, and optimize.
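Once every run's settings are recorded, finding what changed between two runs becomes a mechanical comparison. A minimal sketch, assuming each run's recorded settings are available as a flat dictionary; `diff_configs`, `ABSENT`, and the example values (including the commit hashes) are hypothetical and not part of the W&B SDK.

```python
from typing import Any

ABSENT = "<absent>"  # marker for a setting recorded in only one of the two runs


def diff_configs(a: dict[str, Any], b: dict[str, Any]) -> dict[str, tuple[Any, Any]]:
    """Return {key: (value_in_a, value_in_b)} for every setting that differs."""
    diffs = {}
    for key in sorted(a.keys() | b.keys()):
        va, vb = a.get(key, ABSENT), b.get(key, ABSENT)
        if va != vb:
            diffs[key] = (va, vb)
    return diffs


# Hypothetical settings recorded for two runs of the same training script.
run_a = {"lr": 0.01, "batch_size": 32, "seed": 0, "git_commit": "3f2a9c1"}
run_b = {"lr": 0.001, "batch_size": 32, "seed": 1, "git_commit": "3f2a9c1"}

print(diff_configs(run_a, run_b))  # {'lr': (0.01, 0.001), 'seed': (0, 1)}
```

Because both the learning rate and the seed changed, the diff also shows that a performance gap between these two runs cannot be attributed to the learning rate alone.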

W&B does not train models itself. It tracks, records, and analyzes the training runs you execute.

It runs alongside your training code in frameworks such as TensorFlow and PyTorch, recording and visualizing the resulting data.

Individual users can use W&B for free on the Free plan.

It includes key features such as experiment tracking, model performance logging, AI asset management, and community support, but not team collaboration or advanced security features.

The Pro plan starts at $50 per month, and the price scales with the number of users and the options selected.

Annual billing is discounted, and Enterprise pricing is set through a customized annual contract.

API access is included in all plans, so experiment tracking, sweeps, and W&B Launch deployments can be run from scripts or automation pipelines.

Pro and Enterprise plans add further configuration options, such as API rate limits and private key management.
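For example, a sweep can be defined entirely in code. The dictionary below follows the sweep configuration format described in the W&B documentation (`method`, `metric`, `parameters`); with the SDK it would be registered with `wandb.sweep` and executed by `wandb.agent`. So that the sketch runs without an account, the search loop is a hypothetical local stand-in (`local_random_sweep`) for what an agent does under the `random` method, and `toy_val_loss` is a made-up objective in place of a real training function.

```python
import math
import random
from typing import Any, Callable

# Sweep definition using the keys W&B documents for sweep configurations.
# With the real SDK: sweep_id = wandb.sweep(sweep_config, project="...")
# followed by wandb.agent(sweep_id, function=train, count=...).
sweep_config = {
    "method": "random",
    "metric": {"name": "val_loss", "goal": "minimize"},
    "parameters": {
        "lr": {"distribution": "log_uniform_values", "min": 1e-4, "max": 1e-1},
        "batch_size": {"values": [16, 32, 64]},
    },
}


def sample_config(parameters: dict, rng: random.Random) -> dict[str, Any]:
    """Draw one configuration; handles only the two parameter kinds used above."""
    config: dict[str, Any] = {}
    for name, spec in parameters.items():
        if "values" in spec:
            config[name] = rng.choice(spec["values"])
        elif spec.get("distribution") == "log_uniform_values":
            # Sample uniformly in log space so every decade is equally likely.
            low, high = math.log(spec["min"]), math.log(spec["max"])
            config[name] = math.exp(rng.uniform(low, high))
        else:
            raise ValueError(f"unsupported parameter spec for {name!r}: {spec}")
    return config


def local_random_sweep(sweep: dict, objective: Callable[[dict], float],
                       count: int, seed: int = 0) -> tuple[dict, float]:
    """Hypothetical local stand-in for an agent executing a 'random' sweep."""
    if count < 1:
        raise ValueError("count must be at least 1")
    rng = random.Random(seed)
    sign = 1.0 if sweep["metric"]["goal"] == "minimize" else -1.0
    best_config, best_score = {}, math.nan
    for i in range(count):
        config = sample_config(sweep["parameters"], rng)
        score = objective(config)
        if i == 0 or sign * score < sign * best_score:
            best_config, best_score = config, score
    return best_config, best_score


def toy_val_loss(config: dict) -> float:
    """Made-up objective standing in for a training run: best near lr = 1e-2, small batches."""
    return (math.log10(config["lr"]) + 2.0) ** 2 + config["batch_size"] / 1000.0


best, score = local_random_sweep(sweep_config, toy_val_loss, count=50)
print(best, score)
```

Sampling the learning rate in log space is the usual choice for scale-like parameters: a plain uniform draw between 1e-4 and 1e-1 would place about 90% of samples above 1e-2.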

1. After signing up, copy your API key from your profile.
2. In a terminal, run 'pip install wandb', then run 'wandb login' and paste the API key when prompted to complete authentication.


With wandb.init(mode="offline"), runs are saved locally and can be uploaded later, once an internet connection is available, either with the 'wandb sync' command or through the automatic synchronization feature.


W&B offers 1:1 support at support@wandb.com or through the chat feature at the bottom of the website. Paid plan users (Pro and above) get access to a priority channel, where engineers handle complex issues directly.
