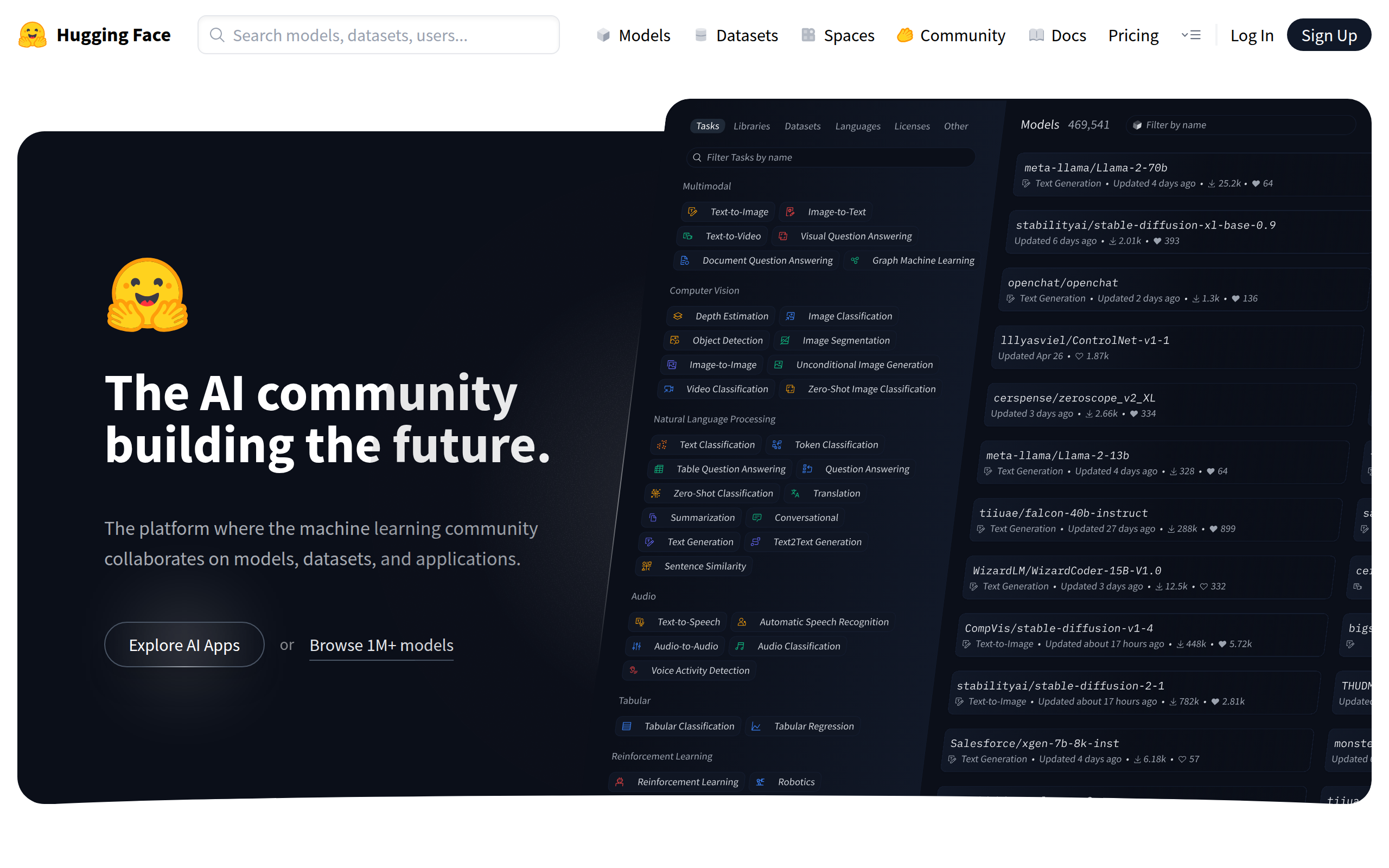

Hugging Face

Hugging Face is an open-source AI development and collaboration platform where hundreds of thousands of AI models and datasets are shared, and where models can be deployed as hosted apps.

- Launch Date: 2016

- Monthly Visitors: 23.9M

- Country of Origin: United States

- Platform: Web

- Language: English

Keywords

- open source AI community

- machine learning model hub

- natural language processing (NLP) libraries

- Transformers

- dataset sharing

- Diffusers

- Spaces

- AI app hosting

- model training-inference pipelines

- AI collaboration

- AI research and industry standards platforms

Platform Description

Core Features

Managing AI repositories with Model Hub

Hundreds of thousands of pre-trained models and datasets are stored in Git repositories, versioned, and shared, complete with documentation and license metadata.
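Because each repository is versioned in Git, a single file can be addressed at a specific revision (a branch, tag, or commit hash). The sketch below builds such a download URL using only the standard library. The `resolve` URL layout is the one the Hub website exposes, but treat it as an assumption and prefer the official `huggingface_hub` client in real code; `hub_file_url` is a hypothetical helper name.

```python
from urllib.parse import quote

HUB_URL = "https://huggingface.co"


def hub_file_url(repo_id: str, filename: str, revision: str = "main",
                 repo_type: str = "model") -> str:
    """Build the download URL for one file at a given Git revision."""
    # Dataset and Space repos live under a type prefix; model repos do not.
    prefixes = {"model": "", "dataset": "datasets/", "space": "spaces/"}
    if repo_type not in prefixes:
        raise ValueError(f"unknown repo_type: {repo_type!r}")
    # Keep "/" inside repo ids and file paths, but escape it in the revision
    # so that a ref such as "refs/pr/1" stays a single path segment.
    return (
        f"{HUB_URL}/{prefixes[repo_type]}{quote(repo_id, safe='/')}"
        f"/resolve/{quote(revision, safe='')}/{quote(filename, safe='/')}"
    )


print(hub_file_url("bert-base-uncased", "config.json"))
```

Pinning `revision` to a tag or commit hash rather than `main` keeps downloads reproducible when the repository is updated later.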

Multitask model framework based on Transformers

The Transformers library provides Python APIs for loading and fine-tuning high-performance pre-trained models across a variety of domains, including natural language processing (NLP), computer vision (CV), and speech recognition.
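As a rough illustration of loading a pre-trained model, the sketch below wraps the library's `pipeline` helper for sentiment analysis. It assumes `transformers` and a backend such as PyTorch are installed and that the machine can download a default checkpoint from the Hub on first use; `classify_sentiment` is a hypothetical wrapper name, not part of the library.

```python
def classify_sentiment(texts):
    """Return one {'label': ..., 'score': ...} dict per input text."""
    # Imported lazily so this module still loads where transformers
    # is not installed; the import fails only when the function is called.
    from transformers import pipeline

    # With no model named, the library selects a default checkpoint
    # for the task and downloads it from the Hub on first use.
    classifier = pipeline("sentiment-analysis")
    return classifier(list(texts))
```

Calling `classify_sentiment(["I love this library."])` would return a list with one label/score dictionary. For repeated calls, build the pipeline once and reuse it rather than recreating it per request.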

Spaces app hosting

Launch and share interactive web apps powered by Gradio or Streamlit with a single click, including collaborative editing, custom domain settings, and resource selection (GPU/CPU).

Inference API Service

Integrate real-time model inference into your services through a REST API.
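A REST integration of this kind typically means sending a JSON payload with a bearer token and reading back JSON. The sketch below shows that shape with the standard library only. The base URL, the `{"inputs": ...}` payload, and the helper names are assumptions based on the classic hosted Inference API; check the current documentation before relying on them.

```python
import json
import urllib.request

# Assumed base URL of the hosted Inference API; verify against current docs.
API_BASE = "https://api-inference.huggingface.co/models"


def build_inference_request(model_id: str, inputs, token: str) -> urllib.request.Request:
    """Prepare (but do not send) a POST request for one model."""
    body = json.dumps({"inputs": inputs}).encode("utf-8")
    return urllib.request.Request(
        url=f"{API_BASE}/{model_id}",
        data=body,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def query(model_id: str, inputs, token: str):
    """Send the request and decode the JSON response."""
    request = build_inference_request(model_id, inputs, token)
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))
```

Keeping request construction separate from sending makes the integration easy to unit-test without network access or a real token.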

AutoTrain automation tool

Train, validate, and deploy models without writing code: just upload your data.

safetensors support

Optimized model loading with a safer and faster tensor storage format.
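To make the safety and speed claim more concrete: a safetensors file starts with an 8-byte little-endian length, followed by a JSON header describing each tensor, followed by the raw tensor bytes. Nothing in the file is executed on load, unlike pickle-based checkpoints, and a reader can inspect the header without touching the data. The sketch below writes and parses a tiny single-tensor payload by hand. The helper names are hypothetical, and real code should use the `safetensors` library.

```python
import json
import struct


def write_single_f32_tensor(name: str, values: list) -> bytes:
    """Serialize one 1-D float32 tensor in the safetensors layout."""
    data = struct.pack(f"<{len(values)}f", *values)
    header = {
        name: {
            "dtype": "F32",
            "shape": [len(values)],
            # Offsets are relative to the start of the data section.
            "data_offsets": [0, len(data)],
        }
    }
    header_bytes = json.dumps(header, separators=(",", ":")).encode("utf-8")
    # Layout: 8-byte little-endian header length, JSON header, raw tensor data.
    return struct.pack("<Q", len(header_bytes)) + header_bytes + data


def read_safetensors_header(blob: bytes) -> dict:
    """Parse only the JSON header, leaving the tensor data unread."""
    (header_len,) = struct.unpack("<Q", blob[:8])
    return json.loads(blob[8:8 + header_len].decode("utf-8"))


blob = write_single_f32_tensor("weight", [1.0, 2.0, 3.0])
header = read_safetensors_header(blob)
print(header)
```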

Dataset management tools

Python libraries optimized for dataset management, including preprocessing, sampling, and subset extraction. They handle large and multilingual datasets efficiently.

Automated model performance evaluation

Automatically calculate key metrics such as accuracy, F1 score, BLEU, and more, and support experiments to compare performance across multiple models.
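For reference, the two simplest of these metrics can be written out in a few lines. The sketch below is a plain-Python illustration of what accuracy and binary F1 compute and how two models might be compared on the same labels. It is not the platform's evaluation tooling, and the labels and predictions are made-up toy data.

```python
def accuracy(references, predictions):
    """Fraction of predictions that match the reference labels."""
    if not references or len(references) != len(predictions):
        raise ValueError("inputs must be non-empty and of equal length")
    correct = sum(r == p for r, p in zip(references, predictions))
    return correct / len(references)


def binary_f1(references, predictions, positive=1):
    """Harmonic mean of precision and recall for the positive class."""
    pairs = list(zip(references, predictions))
    tp = sum(r == positive and p == positive for r, p in pairs)
    fp = sum(r != positive and p == positive for r, p in pairs)
    fn = sum(r == positive and p != positive for r, p in pairs)
    if tp == 0:
        return 0.0  # no true positives: precision and recall are both zero
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Toy comparison of two hypothetical models on the same six labels.
references = [1, 0, 1, 1, 0, 1]
model_outputs = {
    "model_a": [1, 0, 0, 1, 0, 1],
    "model_b": [1, 1, 1, 1, 0, 0],
}
for name, predictions in model_outputs.items():
    print(name, round(accuracy(references, predictions), 3),
          round(binary_f1(references, predictions), 3))
```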

Use Cases

- Text classification

- Sentiment analysis

- Chatbots

- Document summarization

- Machine translation

- Named entity recognition

- Question answering

- Image classification

- Object detection

- Speech recognition

- Multimodal integration

- LLM experiments

- API serving

- Fine-tuning

- Building AI demo apps

How to Use

1. Sign in

2. Browse or upload your favorite models, datasets, and apps

3. Create or clone apps in Spaces to customize them

4. Run and integrate

Plans

| Plan | Price | Key Features |
|---|---|---|
| HF Hub | $0 | • Host unlimited public models/datasets • Unlimited number of users when creating an organization • Access to the latest ML tools and open source • Community-based support |
| PRO Account | $9/mo | • ZeroGPU, Dev Mode available in Spaces • Free credits across Inference Providers • 10x expanded personal storage • Pro user badge to indicate account support status |
| Enterprise Hub | From $20/user/mo | • SSO and SAML support • Choose where your data is stored (Storage Regions) • Detailed action review based on audit logs • Set access controls by resource group • Centralize token issuance and approval • Provide a dedicated viewer for private datasets • Offer a high-performance computing option for Spaces • Allocate 8x more ZeroGPU quota to every member of your organization • Deploy Inference on your own infrastructure • Pay annually and customize billing • Priority support |
| Spaces Hardware | From $0/hour | • Free CPU • Enables advanced Spaces app development • 7 optimized hardware options • Scalable from CPU → GPU → Accelerator |
| Inference Endpoints | From $0.032/hour | • Deploy dedicated inference endpoints in seconds • Operate cost-effectively • Fully managed autoscaling • Enforce enterprise security |

FAQs

- Hugging Face is an open-source AI development and collaboration platform for sharing and using AI models, datasets, and apps. It features the Model Hub, Inference API, AutoTrain, and Spaces.

- You can train or modify AI models, create Gradio or Streamlit-based apps and deploy them to Spaces, and integrate inference results into your services with the Inference API.

- Yes, you can. With AutoTrain, you can train models without coding, and with the Transformers library, you can develop custom models in code.

- Yes. You can view public models/datasets, create public Spaces, and use them for free within limited resources.

- PRO accounts offer private storage creation, faster execution speeds, higher API call volumes, additional storage space, and more.

- There is currently no formal certification, but the Hugging Face team is working on a certification program.

- You can use it for a variety of AI-related practices, including NLP, image analysis, speech processing, chatbot development, creating demos for training, and deploying AI models.

- Availability varies from model to model depending on the stated license. Be sure to check the license if you want to use it commercially.

- You can create a private repository on the PRO plan or higher, and you can restrict access to team members or organizational units.
