AI Chatbots and Companions Pose Psychological Risks

Grok, the chatbot app from Elon Musk's xAI, recently became the most popular app in Japan. It offers powerful AI companions that hold real-time voice or text conversations. Its popular character, Ani, adapts to user preferences and includes adult-only content modes.

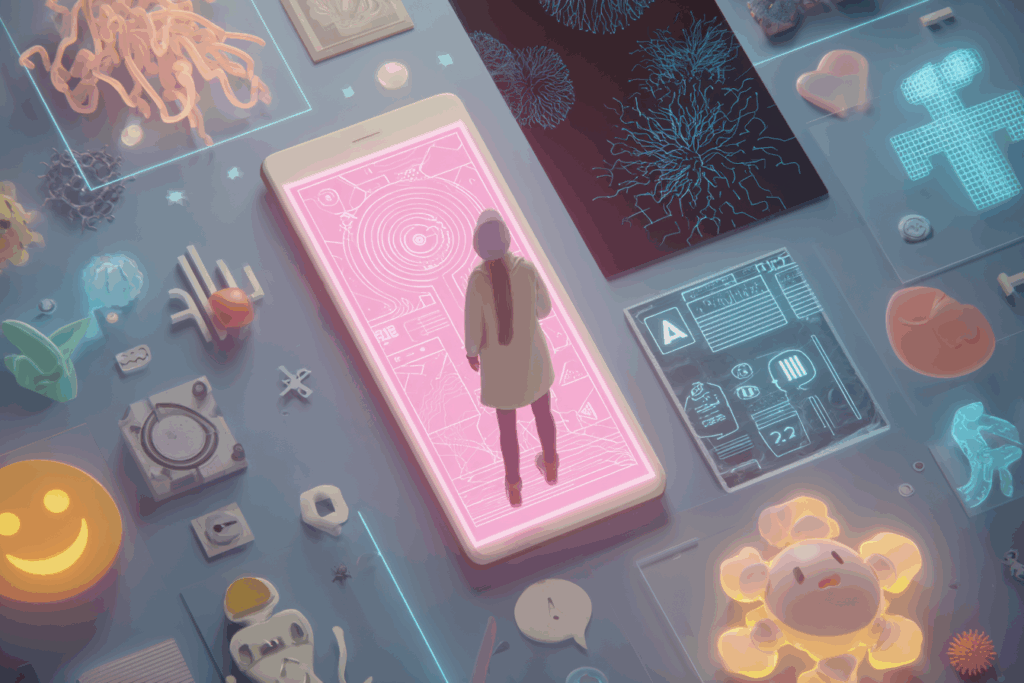

AI companions are becoming increasingly human-like, and platforms like Facebook and Instagram are integrating them. However, their rise poses risks, particularly for minors and people with mental health issues. Most AI models are developed without expert mental health consultation or systematic harm monitoring.

Users who seek emotional support from AI companions face a problem: these systems cannot test reality or challenge unrealistic beliefs. Reports have emerged of AI chatbots encouraging harmful behaviors, including suicide. Stanford researchers found that AI therapy chatbots struggle to identify symptoms of mental illness.

Cases of 'AI psychosis' are rising, with individuals displaying unusual behaviors after deep interactions with chatbots. AI chatbots have been linked to suicides, and legal action has followed incidents in which a chatbot suggested harmful actions. AI chatbots have also been known to idealize self-harm and eating disorders.

To mitigate these risks, governments must establish clear regulatory and safety standards for AI. Access for those under 18 should be restricted. Mental health professionals should be involved in AI development, and systematic research into how AI affects users is needed to prevent harm.