Orq.ai

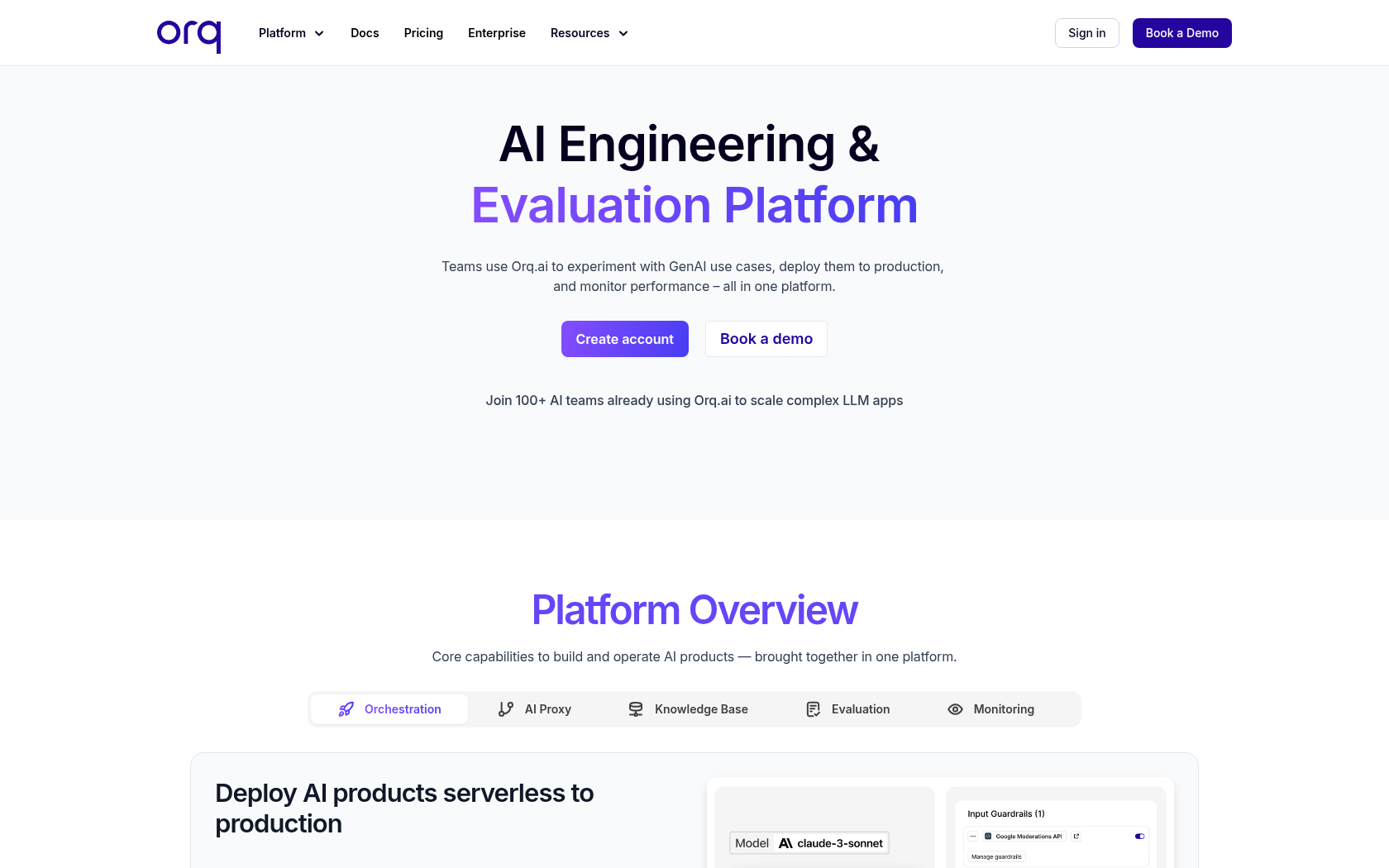

Orq.ai is a next-generation generative AI platform that unifies and manages the entire LLM operations lifecycle, from prompt design to model evaluation, deployment, and monitoring, with built-in collaboration and security features.

- Launch Date: 2024

- Monthly Visitors: 9.8K

- Country of Origin: Netherlands

- Platform: Web

- Language: English

Keywords

- LLMOps platform

- AI operations management

- AI deployment automation

- Prompt management

- Model orchestration

- RAG pipeline

- AI governance

- SOC 2 certification

- Generative AI platform

- LLM experimentation tools

- Prompt versioning

- AI collaboration tools

- LLM evaluation automation

- Data privacy

- On-premises AI

Platform Description

Orq.ai is a collaborative generative AI platform for developing, deploying, and operating AI applications and agents built on large language models (LLMs). It manages the entire LLM operations process in one unified environment, including prompt design, model experimentation, performance evaluation, deployment, and monitoring. Users can experiment with an integrated set of models from providers including OpenAI, Anthropic, and Mistral, and can quickly switch or combine models to achieve optimal performance without code modifications.
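As a rough illustration of switching models without code modifications, the sketch below routes a prompt based on a configuration value. Everything here is an assumption made for illustration: `PROVIDERS`, `complete`, and the stand-in provider functions are hypothetical and are not part of the Orq.ai SDK or API.

```python
from typing import Callable, Dict


# Hypothetical stand-ins for provider clients; real ones would call provider APIs.
def fake_openai(prompt: str) -> str:
    return f"[openai] {prompt}"


def fake_anthropic(prompt: str) -> str:
    return f"[anthropic] {prompt}"


# Registry that maps a provider name from configuration to a callable.
PROVIDERS: Dict[str, Callable[[str], str]] = {
    "openai": fake_openai,
    "anthropic": fake_anthropic,
}


def complete(prompt: str, config: Dict[str, str]) -> str:
    """Route the prompt to whichever provider the configuration names."""
    provider = config["provider"]
    if provider not in PROVIDERS:
        raise ValueError(f"unknown provider: {provider}")
    return PROVIDERS[provider](prompt)


config = {"provider": "openai"}
before = complete("Hi", config)

config["provider"] = "anthropic"  # a configuration change, not a code change
after = complete("Hi", config)
```

The calling code stays the same while the configuration decides which model answers, which is the general idea behind a model gateway.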

At the core of Orq.ai are prompt management and an automated experimentation environment. Developers and product planners can manage prompt versions, compare experiment results, and quantitatively evaluate performance in a visual interface. They can also set up retrieval augmentation (RAG) and define output control rules (guardrails) to improve the quality and reliability of model responses. This structure simplifies iterative AI experimentation and speeds up product development.
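To make the idea of prompt versioning concrete, here is a minimal in-memory sketch. `PromptRegistry` and its methods are hypothetical names chosen for illustration; they do not describe how Orq.ai stores prompts internally.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class PromptRegistry:
    """Hypothetical in-memory store that keeps every saved version of a prompt."""

    _versions: Dict[str, List[str]] = field(default_factory=dict)

    def save(self, name: str, template: str) -> int:
        """Append a new version and return its 1-based version number."""
        history = self._versions.setdefault(name, [])
        history.append(template)
        return len(history)

    def get(self, name: str, version: Optional[int] = None) -> str:
        """Return a specific version, or the latest when version is None."""
        history = self._versions[name]
        if version is None:
            return history[-1]
        if not 1 <= version <= len(history):
            raise KeyError(f"{name} has no version {version}")
        return history[version - 1]


registry = PromptRegistry()
registry.save("summarize", "Summarize the text: {text}")
registry.save("summarize", "Summarize the text in three bullet points: {text}")

latest = registry.get("summarize")            # newest version
first = registry.get("summarize", version=1)  # pinned older version
```

Keeping older versions addressable by number is what makes it possible to compare experiment results across prompt revisions or roll back to an earlier one.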

Orq.ai is also built for enterprise environments in terms of security and data management. It is SOC 2 certified and GDPR compliant, with options for data residency selection, role-based access control, and on-premises or VPC deployment. This makes it suitable for security-critical industries such as finance, healthcare, and government.

Core Features

- Prompt management: Centralized management to save, modify, and reuse prompts by version

- Model gateway: Connects various LLM APIs for model routing and automatic fallback to substitute models

- RAG pipeline management: Configure and visualize retrieval-augmented generation (RAG) pipelines

- Experimentation and evaluation engine: Compare and automatically score performance for each prompt and model combination

- Orchestration management: Automate pipelines that control model calls, retries, and fallbacks

- Security and governance controls: Manage permissions, data masking, and audit logs

- Monitoring dashboard: Real-time analytics on AI response quality, cost, error rate, latency, and more

- SDK and API integrations: Python and Node SDKs for direct integration within your development environment
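The retry and fallback behavior listed among the features above can be sketched in a few lines. This is a generic illustration under stated assumptions, not the platform's implementation: `call_with_fallback`, `flaky_primary`, and `stable_backup` are hypothetical names, and the models are plain Python callables rather than real API clients.

```python
import time
from typing import Callable, Optional, Sequence

ModelFn = Callable[[str], str]


def call_with_fallback(
    prompt: str,
    models: Sequence[ModelFn],
    max_retries: int = 1,
    backoff_seconds: float = 0.0,
) -> str:
    """Try each model in order; retry a failing model before falling back."""
    last_error: Optional[Exception] = None
    for model in models:
        for attempt in range(max_retries + 1):
            try:
                return model(prompt)
            except Exception as exc:  # a real client would catch narrower errors
                last_error = exc
                if attempt < max_retries and backoff_seconds > 0:
                    # Exponential backoff between retries of the same model.
                    time.sleep(backoff_seconds * (2 ** attempt))
    raise RuntimeError("all models failed") from last_error


def flaky_primary(prompt: str) -> str:
    raise TimeoutError("primary model timed out")


def stable_backup(prompt: str) -> str:
    return f"backup answer to: {prompt}"


answer = call_with_fallback("Hello", [flaky_primary, stable_backup])
```

The order of the `models` sequence encodes the fallback priority, so changing the routing policy means reordering a list rather than rewriting call sites.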

Use Cases

- Prompt experimentation

- LLM performance comparison

- Model version management

- AI agent deployment

- RAG configuration

- Prompt optimization

- Guardrails configuration

- Model evaluation automation

- A/B testing

- Cost monitoring

- Privacy protection

- Governance management

- On-premises operations

- Collaborative AI development

- Multi-model management
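For the A/B testing use case, one common approach is to assign each user to a prompt variant deterministically by hashing their ID, so the same user always sees the same variant. The sketch below shows that general technique only; `assign_variant`, the experiment name, and the variant labels are hypothetical and do not reflect how Orq.ai performs its own experiments.

```python
import hashlib
from typing import Sequence


def assign_variant(
    user_id: str,
    variants: Sequence[str],
    experiment: str = "prompt-test",
) -> str:
    """Map a user to one variant, stable across calls for the same inputs."""
    if not variants:
        raise ValueError("at least one variant is required")
    # Hash the experiment name together with the user ID so that different
    # experiments split the same users independently.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]


variants = ["prompt_v1", "prompt_v2"]
chosen = assign_variant("user-42", variants)
```

Because the assignment depends only on the inputs, no per-user state needs to be stored to keep the experience consistent.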

How to Use

- Set up your workspace

- Enable models

- Configure deployments

- Make SDK calls and monitor results

Plans

| Plan | Price | Key Features |
|---|---|---|
| Free | €0 | • 1,000 logs stored (limited) • 1 user • 100 API calls per minute • 3-day log and trace retention • 50 MB storage • Integrated model API access • Prompt engineering capabilities • Evaluator library • Real-time log monitoring • Dashboards • Community support center • Email support |
| Pro | €250/mo | • All Free plan features • 25,000 logs stored • 5 users • 1,500 API calls per minute • 2.5 GB storage • 30-day log and trace retention • Unlimited webhook support • Standard SLAs • Slack / Teams customer support |
| Enterprise | Custom | • All Pro plan features • Custom log and trace settings • Custom call rate limits • Scalable custom storage • Prioritized document processing support • Role-based access control • AWS and Azure Marketplace deployments • VPC or on-premises deployment option • Solution engineer technical support • Enterprise SLAs |
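The per-minute API call limits in the plan table (100 on Free, 1,500 on Pro) suggest guarding calls on the client side. The following is a minimal sliding-window sketch under stated assumptions: `SlidingWindowLimiter` is a hypothetical helper, and how the platform itself counts or enforces limits is not described in this listing.

```python
import time
from collections import deque
from typing import Callable, Deque


class SlidingWindowLimiter:
    """Client-side guard that allows at most `limit` calls per `window` seconds."""

    def __init__(
        self,
        limit: int,
        window: float = 60.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.limit = limit
        self.window = window
        self.clock = clock
        self._calls: Deque[float] = deque()

    def try_acquire(self) -> bool:
        """Record a call and return True if it fits in the current window."""
        now = self.clock()
        # Drop timestamps that have aged out of the window.
        while self._calls and now - self._calls[0] >= self.window:
            self._calls.popleft()
        if len(self._calls) < self.limit:
            self._calls.append(now)
            return True
        return False


free_plan = SlidingWindowLimiter(limit=100)  # 100 calls per minute, per the table
allowed = sum(free_plan.try_acquire() for _ in range(150))
```

In this run, the 150 back-to-back attempts fall inside one window, so only the first 100 are allowed. The injectable `clock` parameter exists so the limiter can be tested without waiting for real time to pass.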

FAQs

- Orq.ai is a generative AI collaboration platform that enables software teams to build, ship, and optimize large language model (LLM) applications.

- A log is a record of the inputs, outputs, and metadata of a single LLM call. It lets you track what requests the AI model received and what results it returned.

- Logs are generated automatically each time your application interacts with an LLM through Orq.ai. They include the following entries:

  - Call logs generated each time your application sends a request to an LLM through Orq.ai

  - External logs sent from your infrastructure

  - Feedback data submitted by users or your team

  - Results and judgment logs from evaluation tools or automated tests

- All data is encrypted and stored in secure data centers on Google Cloud Platform. Orq.ai follows industry security and compliance standards, and all code repositories and server images are continuously scanned for vulnerabilities.

  In addition, SOC 2 certification is managed through the Vanta platform, and personal information is stored minimally and can be set to be deleted immediately when needed.

- Orq.ai is SOC 2 certified, compliant with the EU General Data Protection Regulation (GDPR), and meets the standards of the EU Artificial Intelligence Act. These security measures help teams meet regulations while keeping their data safe.

- Orq.ai supports a variety of deployment environments, including cloud, VPC, and on-premises. You can choose your model hosting environment based on your organization's security policies and infrastructure requirements.

- Orq.ai covers the entire LLMOps lifecycle, managing the design, deployment, monitoring, and performance optimization of AI agents in one place. While Langfuse and LangSmith focus primarily on observability, Orq.ai is structured for building and operating end-to-end AI systems.

- Orq.ai Studio is a tool for handling the core workflows of LLMOps. It lets teams control the entire generative AI process in one place, including model training, evaluation, deployment, and monitoring.
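The FAQ describes a log as a record of the inputs, outputs, and metadata of one LLM call. A minimal sketch of such a record might look like the following. The `LLMCallLog` class, its field names, and the sample values are all assumptions made for illustration; they are not the platform's actual log schema.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Any, Dict


@dataclass
class LLMCallLog:
    """Hypothetical record of one LLM call: inputs, outputs, and metadata."""

    model: str
    prompt: str
    output: str
    metadata: Dict[str, Any] = field(default_factory=dict)
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        """Serialize the record so it can be shipped to a log store."""
        return json.dumps(asdict(self), sort_keys=True)


log = LLMCallLog(
    model="example-model",  # placeholder model name
    prompt="What is LLMOps?",
    output="LLMOps covers operating LLM applications in production.",
    metadata={"latency_ms": 420, "total_tokens": 57},  # made-up sample values
)
serialized = log.to_json()
```

Keeping latency and token counts in the metadata is what later allows dashboards to aggregate cost, error rate, and latency across calls.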
